I installed Netdata in a virtual machine running Debian 10 for test purposes. The VM has 2 GB of RAM configured and uses around 200 MB when running. As soon as I install Netdata, usage jumps to almost 2 GB. I have read that Netdata is supposed to be a lightweight monitoring agent. I uninstalled and reinstalled it, but the RAM issue remains. I have already tried the tips for reducing memory consumption, but they don’t work.

Maybe this is the Netdata server-side installation and I just need to install the agent? Sorry, I am new to Netdata, but I really like the product.

Hi @Lcarrillo.

> As soon as I install Netdata it jumps to almost 2Gb consumed.

That is not expected. How did you check ram usage?

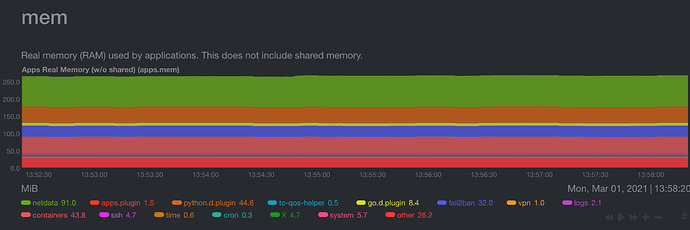

Could you share apps.mem (Applications->mem->apps.mem)?

I have the Netdata Agent installed on DO (a VM with 1 GB of RAM).

In the System Overview -> RAM section, it shows 1.75 GiB of physical memory used and only 0.08 GiB free.

I installed another tool, Glances, to check whether the RAM was actually consumed, and it showed the same usage. As soon as I uninstall Netdata, the problem goes away.

To make it clear: the problem is used: 1.75 GB? Why do you think it is consumed by Netdata?

Can you run the following while Netdata is running?

    # system free/used memory
    free -h

    # top 30 memory consumers
    ps -eo pmem,pcpu,vsize,pid,cmd | sort -k 1 -nr | head -30

When I ran those commands, this is the info I got. Sorry for all the trouble, and thanks for all your help.

I believe the netdata go.d.plugin is causing this RAM consumption issue.

It is not go.d.plugin; it uses 12 MB. You can see this on the Netdata dashboard (apps.mem) and in the top output, where it is 0.5% (pmem, the first column).

I’m also seeing that the netdata agent is using a lot more ram recently.

I have an Ubuntu server dedicated to an HAProxy instance, with 3 GB of RAM. Without the Netdata agent running, total memory usage sits around 600 MB; with the agent running, it is around 2.3 GB.

Agent documentation says memory usage should be around 100M without database, is that still correct?

    $ netdata -v
    netdata v1.29.3-172-nightly

    $ lsb_release -a
    No LSB modules are available.
    Distributor ID: Ubuntu
    Description:    Ubuntu 20.04.2 LTS
    Release:        20.04
    Codename:       focal

    $ free -h
                  total        used        free      shared  buff/cache   available
    Mem:          2.9Gi       2.3Gi       143Mi       1.0Mi       503Mi       393Mi
    Swap:         3.0Gi        16Mi       3.0Gi

    $ ps -eo pmem,pcpu,vsize,pid,cmd | sort -k 1 -nr | head -10
    10.7 18.9 538684 3053127 /usr/sbin/netdata -P /var/run/netdata/netdata.pid -D
     3.0  0.0 285316     488 /lib/systemd/systemd-journald
     1.1  9.8  55252 3053328 /usr/bin/python3 /usr/libexec/netdata/plugins.d/python.d.plugin 1
     0.9  0.8 144132     969 /usr/sbin/haproxy -Ws -f /etc/haproxy/haproxy.cfg -p /run/haproxy.pid -S /run/haproxy-master.sock
     0.9  0.0 141948     967 /usr/sbin/haproxy -Ws -f /etc/haproxy/haproxy.cfg -p /run/haproxy.pid -S /run/haproxy-master.sock
     0.7  3.5 725444 3053333 /usr/libexec/netdata/plugins.d/go.d.plugin 1

htop shows netdata using only 10.9%, but as with the OP, memory usage drops from 2.3 GB to 600 MB when I run systemctl stop netdata.

I just checked my other HAProxy server, and Netdata is only using ~50 MB there on the same version. That server is configured very similarly; the main difference is that it has only 2 GB of RAM.

    $ netdata -v
    netdata v1.29.3-172-nightly

    $ lsb_release -a
    No LSB modules are available.
    Distributor ID: Ubuntu
    Description:    Ubuntu 20.04.2 LTS
    Release:        20.04
    Codename:       focal

    $ free -h
                  total        used        free      shared  buff/cache   available
    Mem:          1.9Gi       503Mi       1.2Gi       0.0Ki       228Mi       1.3Gi
    Swap:         2.0Gi       371Mi       1.6Gi

    $ ps -eo pmem,pcpu,vsize,pid,cmd | sort -k 1 -nr | head -10
     2.6  1.6  564284 564164 /usr/sbin/netdata -P /var/run/netdata/netdata.pid -D
     1.3  0.2   71396 564324 /usr/bin/python3 /usr/libexec/netdata/plugins.d/python.d.plugin 1
     0.9  0.5  129876    504 /lib/systemd/systemd-journald
     0.9  0.0  345824    764 /sbin/multipathd -d -s
     0.6  0.6 1227108    899 /usr/lib/snapd/snapd
     0.6  0.1  725444 564329 /usr/libexec/netdata/plugins.d/go.d.plugin 1

@ilyam8 Both of these servers now have the high memory usage issue.

I found this while looking through open GitHub issues, but I’m not sure it’s related, because I do not have metrics streaming to a central Netdata server: "High RAM usage by netdata-master" (netdata/netdata issue #10471 on GitHub).

Hi @haydenseitz

- What is your retention storage setting, dbengine multihost disk space? It should be in the [global] section of /etc/netdata/netdata.conf.
- How many metrics are you collecting? The counts should be at the very bottom of the output of http://localhost:19999/api/v1/charts.

The counts look similar to:

    "charts_count": 386,
    "dimensions_count": 2567,
    "alarms_count": 95,
    "rrd_memory_bytes": 2998876,
    "hosts_count": 5,

Also could you share the output of http://localhost:19999/api/v1/chart?chart=system.cpu
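For anyone who wants to pull just those counters instead of scrolling to the bottom of the JSON, here is a minimal sketch. It assumes the agent listens on the default http://localhost:19999 mentioned above and that the counters are top-level keys of the response, as the excerpt suggests; the function names are made up for this example.

```python
import json
from urllib.request import urlopen

# Counter names quoted in the thread; assumed to be top-level keys of the
# /api/v1/charts response.
SUMMARY_KEYS = (
    "charts_count",
    "dimensions_count",
    "alarms_count",
    "rrd_memory_bytes",
    "hosts_count",
)


def summarize_charts(payload: dict) -> dict:
    """Return only the summary counters, skipping any that are absent."""
    return {key: payload[key] for key in SUMMARY_KEYS if key in payload}


def fetch_charts(base_url: str = "http://localhost:19999") -> dict:
    """Download and decode the charts listing from a running agent."""
    with urlopen(f"{base_url}/api/v1/charts", timeout=10) as response:
        return json.load(response)


# Example (needs a running agent):
#   for name, value in summarize_charts(fetch_charts()).items():
#       print(f"{name}: {value}")
```

Keeping the network call separate from the filtering makes it easy to run the filter on a saved copy of the JSON as well.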

Retention is set to roughly 7 days:

    [global]
        dbengine multihost disk space = 2048

Most of my installations have around 2000 metrics. Here is one having the issue:

    ...
    "charts_count": 337,
    "dimensions_count": 2436,
    "alarms_count": 48,
    "rrd_memory_bytes": 2700032,
    "hosts_count": 1,
    ...

I have been switching installations to the stable branch and I have not seen the high memory issue yet when running version netdata v1.29.3

@Stelios_Fragkakis I am now seeing the high memory usage behavior in Netdata stable 1.30.0 as well. A freshly installed Ubuntu 20 server goes from 500 MB to 1.8 GB of memory usage when the Netdata agent starts.

Do you think it could be related to this issue? "High RAM usage by netdata-master" (netdata/netdata issue #10471 on GitHub)

Thanks for your help on this!

This does not seem to be related. I am going through the changes from v1.29.3-172-nightly onwards again to try to pinpoint this.

@haydenseitz to help us investigate the problem, can you send a snapshot to ilya@netdata.cloud?

The dbengine metadata usage looks normal (pages on disk ~2.7 million)

Thanks @Stelios_Fragkakis and @ilyam8 for looking into this. Perhaps it would be useful to post any findings here; maybe a contributor gets inspired and helps us debug it even faster.

Something I don’t fully understand is that tools like htop still show netdata using only ~500 MB of virtual memory and ~300 MB of resident memory, yet total memory usage changes by more than 1.5 GB when the Netdata agent starts. Are there any other tools I can use to track down exactly which process is filling up memory?

While running:


    $ ps aux | head -n1; ps aux | grep netdata
    USER         PID %CPU %MEM    VSZ    RSS TTY      STAT START   TIME COMMAND
    netdata  2103535  6.0  8.3 586400 333792 ?        Ssl  08:44   0:02 /usr/sbin/netdata -P /var/run/netdata/netdata.pid -D
    netdata  2103545  0.0  0.0  26864   2312 ?        Sl   08:44   0:00 /usr/sbin/netdata --special-spawn-server
    netdata  2103924  0.1  0.0   4108   3280 ?        S    08:44   0:00 bash /usr/libexec/netdata/plugins.d/tc-qos-helper.sh 1
    netdata  2103928  1.2  0.5 724168  23300 ?        Sl   08:44   0:00 /usr/libexec/netdata/plugins.d/go.d.plugin 1
    netdata  2103931  3.5  0.1  56152   6308 ?        R    08:44   0:01 /usr/libexec/netdata/plugins.d/apps.plugin 1
    root     2103933  5.6  0.0 380172   3820 ?        Sl   08:44   0:02 /usr/libexec/netdata/plugins.d/ebpf.plugin 1
    netdata  2103935  1.7  0.6  48356  27976 ?        Sl   08:44   0:00 /usr/bin/python /usr/libexec/netdata/plugins.d/python.d.plugin 1

    $ free -m
                  total        used        free      shared  buff/cache   available
    Mem:           3907        2883         256           7         767         745
    Swap:          3907          98        3809

While stopped:


    $ sudo systemctl stop netdata

    $ free -m
                  total        used        free      shared  buff/cache   available
    Mem:           3907        1304        1841           7         761        2325
    Swap:          3907          98        3809
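To put numbers on the mismatch, the figures in the two outputs above can be compared directly. This is only arithmetic on the values posted in this thread; it adds up the RSS column for every Netdata-related process and compares the total with the drop in "used". Summing RSS counts shared pages more than once, so the real per-process footprint is, if anything, smaller.

```python
# RSS column (KiB) from the `ps aux` output above, one entry per process.
rss_kib = {
    "netdata": 333792,
    "netdata --special-spawn-server": 2312,
    "tc-qos-helper.sh": 3280,
    "go.d.plugin": 23300,
    "apps.plugin": 6308,
    "ebpf.plugin": 3820,
    "python.d.plugin": 27976,
}

used_running_mib = 2883  # "used" from `free -m` while the agent runs
used_stopped_mib = 1304  # "used" from `free -m` after stopping it

rss_total_mib = sum(rss_kib.values()) / 1024
freed_mib = used_running_mib - used_stopped_mib
unexplained_mib = freed_mib - rss_total_mib

print(f"RSS of all Netdata processes: {rss_total_mib:.0f} MiB")
print(f"'used' drop after stopping:   {freed_mib} MiB")
print(f"not explained by RSS:         {unexplained_mib:.0f} MiB")
```

With these inputs, roughly 391 MiB is attributable to process RSS, while "used" drops by 1579 MiB, which leaves about 1188 MiB that per-process tools such as htop would not show.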

Yeah, that keeps me wondering too.

Let’s try disabling the external plugins one by one to see if it changes anything (see the [plugins] section of netdata.conf).

@haydenseitz here are the docs to disable plugins:

Thank you for helping us figure this out!

Thanks, I’ll try disabling them and see if I find a suspect.

Another oddity: sometimes I stop and restart the agent and memory usage stays at the expected level. But at some point, usually just a day or two later, it jumps up.
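Since the jump is not visible in any process's resident memory, one hypothesis (not confirmed anywhere in this thread) is that the memory is held on the kernel side. A small sketch for checking that: it reads a few kernel counters from /proc/meminfo so they can be compared before and after the jump, or with the agent running and stopped. The choice of fields and the function names are assumptions made for this example.

```python
from pathlib import Path

# Kernel-side counters that are not part of any process's RSS.
# Which of these matter here is an assumption, not a finding.
KERNEL_FIELDS = (
    "Slab",
    "SUnreclaim",
    "KernelStack",
    "PageTables",
    "VmallocUsed",
    "Percpu",
)


def parse_meminfo(text: str) -> dict:
    """Map each /proc/meminfo field name to its numeric value.

    Most fields are reported in kB; the HugePages_* entries are page counts.
    """
    values = {}
    for line in text.splitlines():
        name, _, rest = line.partition(":")
        parts = rest.split()
        if parts and parts[0].isdigit():
            values[name.strip()] = int(parts[0])
    return values


def kernel_usage_mib(meminfo: dict) -> dict:
    """Return the selected kernel counters converted from kB to MiB."""
    return {f: meminfo[f] / 1024 for f in KERNEL_FIELDS if f in meminfo}


def snapshot(path: str = "/proc/meminfo") -> dict:
    """Read the live counters from the given meminfo file."""
    return kernel_usage_mib(parse_meminfo(Path(path).read_text()))
```

Calling snapshot() once while memory usage is normal and again after it jumps shows whether any of these counters grew by a similar amount. If none of them moves, the cause is somewhere else.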